AI Guides

Will AI Replace Software Engineers?

As artificial intelligence (AI) continues to advance, its impact on various professions, including software engineering, is a hot topic of discussion. Among the tools at the forefront of this technological revolution is ChatGPT, a sophisticated AI developed by OpenAI.

With its ability to generate human-like text, ChatGPT raises questions about the future of software engineers: Will it replace them, or become an invaluable tool in their arsenal?

This article takes a deep dive into how AI tools like ChatGPT are being integrated into software development and what that means for the future of the profession. We’ll cover AI’s ability to automate tasks and enhance productivity, as well as the ongoing need for human expertise in an evolving technology landscape.

The Impact of AI in Software Engineering

Artificial Intelligence (AI) has significantly transformed the field of software engineering, influencing everything from development processes to the roles of the engineers themselves. The integration of AI, particularly tools like ChatGPT, has led to several key advancements and shifts in how software development is approached.

Automation of Routine Tasks

AI has automated many routine and repetitive tasks in software engineering, such as code generation, testing, and debugging. For example, AI-powered tools can analyze written code and suggest improvements or identify errors before they cause problems. This not only speeds up the development process but also enhances the accuracy and efficiency of the final products.

According to GitHub’s Octoverse report, the use of AI in code completion tools like GitHub Copilot has helped reduce the time developers spend writing code by up to 55% (Source: GitHub Octoverse).
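As a concrete illustration of the kind of issue such tools flag before it causes problems, the Python sketch below uses the standard-library `ast` module to detect mutable default arguments. This is a classic bug in which one list or dict is silently shared across every call of a function. The checker and its name, `find_mutable_defaults`, are hypothetical and purely rule-based, not an AI model. AI-powered assistants aim to catch patterns like this without relying only on hand-written rules.

```python
import ast

# Literal node types whose default value would be shared between calls.
MUTABLE_NODES = (ast.List, ast.Dict, ast.Set)


def find_mutable_defaults(source: str) -> list[str]:
    """Return a warning for each function parameter whose default is a
    list, dict, or set literal (a hypothetical rule-based check)."""
    warnings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        args = node.args
        # Positional defaults belong to the *last* len(defaults) parameters.
        positional = args.posonlyargs + args.args
        pairs = list(zip(positional[len(positional) - len(args.defaults):], args.defaults))
        # Keyword-only parameters use None in kw_defaults for "no default".
        pairs += [(a, d) for a, d in zip(args.kwonlyargs, args.kw_defaults) if d is not None]
        for arg, default in pairs:
            if isinstance(default, MUTABLE_NODES):
                warnings.append(
                    f"line {default.lineno}: '{node.name}' uses a mutable default "
                    f"for '{arg.arg}'; prefer None and create the object inside"
                )
    return warnings


snippet = """
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket
"""

for warning in find_mutable_defaults(snippet):
    print(warning)
```

Run on the snippet, the checker reports one warning, for `bucket` on line 2. A version with `bucket=None` passes cleanly.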

Enhanced Code Quality

AI applications in software engineering have also led to improvements in code quality. By utilizing machine learning models that learn from vast datasets of high-quality code, AI tools can provide recommendations that help developers adhere to best practices and coding standards.

Shift in Skill Set Requirements

As AI takes over more routine coding tasks, there is a shift in the skill set required for software engineers. Developers now need to have a strong understanding of AI and machine learning principles to work effectively with these technologies. This shift is reflected in the changing curricula of computer science courses, which increasingly include AI and machine learning components.

Impact on Job Roles

While there is a common fear that AI might replace software engineering jobs, the reality is more nuanced. AI is changing the nature of these jobs but not necessarily reducing the number of them. Software engineers are needed to design, oversee, and integrate AI systems into existing infrastructures.

Limitations of AI in Software Engineering

While AI has brought significant advancements to the field of software engineering, it also presents several limitations that can impact its effectiveness and application. Understanding these limitations is crucial for effectively integrating AI into software development processes.

Dependence on Quality Data

AI’s performance is heavily reliant on the quality and quantity of the data it is trained on. In software engineering, this means that AI tools can only be as good as the code and data sets used to train them. Poor quality or biased data can lead to inaccurate suggestions or flawed automation processes.

Lack of Contextual Understanding

AI systems, including advanced language models like ChatGPT, often lack a deep contextual understanding of the projects they are used on. While they can generate code and perform tasks based on patterns, they do not understand the business logic or end goals behind a project. This can lead to recommendations that are technically correct but not suitable for specific project requirements or goals.

Difficulty Handling Complex Problem Solving

AI is excellent for addressing well-defined problems with clear parameters, but it struggles with complex problem-solving that requires high levels of creativity and innovation. Software engineering often involves novel challenges that cannot be easily quantified or solved through standard algorithms. In such cases, human intuition and experience are irreplaceable.

Integration Challenges

Incorporating AI into existing software development workflows can be challenging. Compatibility issues between AI tools and existing software platforms can hinder the seamless integration of automated processes. Additionally, there can be a significant learning curve for teams to effectively utilize AI capabilities, requiring time and resources that may not always be available.

Security and Privacy Concerns

As AI tools become more integrated into software development, they also raise concerns about security and privacy. The use of AI for code generation and automation necessitates access to sensitive project data, which can be a target for cyber threats. Ensuring that AI systems are secure and that data used is protected remains a significant challenge.

What Does All This Mean for Software Engineering Jobs?

As Artificial Intelligence (AI) becomes more embedded in software engineering, it is transforming the profession in profound ways. This evolution is not just about changing the tasks that software engineers perform but also about redefining their roles in the tech ecosystem and the broader job market. Here’s a comprehensive look at how AI is reshaping the career landscape for software engineers:

1) Transformation of Job Roles

AI’s integration into software development is shifting the traditional role of software engineers. As routine coding tasks become automated, the focus shifts towards more complex and strategic work. Software engineers are now expected to manage AI systems, integrate AI with existing infrastructures, and continuously update their skills to keep pace with the advancements in AI technology. This shift is less about displacement and more about evolution, where the core responsibilities are becoming more intellectually demanding and creatively fulfilling.

2) Emergence of New Specializations

The rise of AI is creating new niches and specialties within software engineering. Roles such as AI Ethics Officer, AI System Trainer, and AI Integration Specialist are becoming more common. These roles require a deep understanding of both technical and ethical aspects of AI, blending programming skills with an acute awareness of AI’s broader impacts on society.

3) Increased Demand for AI Literacy

As AI technologies permeate various aspects of business operations, there is a growing demand for software engineers who are not only proficient in traditional programming but also fluent in AI and machine learning concepts. This demand is expanding beyond tech companies to sectors like healthcare, finance, and manufacturing, where AI is used to streamline operations and enhance decision-making processes.

4) Need for Lifelong Learning

The rapid pace of AI development necessitates a commitment to continuous learning and professional development. Software engineers must stay abreast of the latest tools, languages, and AI advancements. Educational institutions and professional development programs are adapting to this change by offering specialized courses in AI and machine learning, which are becoming essential for upcoming software engineers.

5) Broader Implications for Employment

While AI introduces new tools and capabilities in software engineering, it also brings challenges, particularly in terms of job displacement in lower-level coding positions. However, this displacement is also accompanied by job creation, particularly in areas that require high-level analytical and managerial skills. The net effect of AI on software engineering employment is complex and multifaceted, suggesting a shift in the types of jobs available rather than a straightforward reduction in job opportunities.

6) Global Impact and Accessibility

AI is democratizing the field of software development by enabling more people to build software through AI-driven code generation tools, lowering the barrier to entry for software development. This has the potential to globalize the field further, spreading job opportunities across different regions and reducing the concentration of jobs in certain tech hubs.

7) Ethical and Societal Considerations

As AI reshapes the software engineering landscape, it also raises ethical questions that professionals in the field must address. This includes issues like bias in AI algorithms, privacy concerns, and the societal impact of automated systems. Addressing these issues responsibly is becoming a part of the job description for software engineers.

No, AI Won’t Replace Software Engineers

As we’ve explored throughout this article, the integration of Artificial Intelligence (AI) into software engineering is undoubtedly reshaping the landscape of the profession. AI has proven its capability to automate routine tasks, enhance productivity, and help solve well-defined problems efficiently. However, despite these advancements, AI is not poised to replace software engineers in the foreseeable future.

AI excels at specific tasks where patterns and data are abundant, but it lacks the nuanced understanding and creative problem-solving inherent to human intelligence. Software engineers bring critical thinking, strategic insight, and ethical considerations to projects—qualities that AI cannot replicate. Rather than replacing engineers, AI is transforming the role, enabling them to focus on more innovative and complex aspects of software development.

The evolution in the field calls for software engineers to adapt by enhancing their skills in AI and machine learning. This adaptation is not just about staying relevant but also about leveraging AI to create more sophisticated, efficient, and impactful software solutions. As AI continues to develop, it will serve as a powerful tool in the engineer’s toolkit, augmenting human capabilities rather than supplanting them.

In summary, the relationship between AI and software engineering is symbiotic. AI may change how engineers work, but it also opens up new opportunities for innovation and efficiency. The future of software engineering will likely see AI and human intelligence working in tandem, each complementing the other to push the boundaries of what’s possible in technology and beyond.


AI Music Production 2025: Pro Tools & Techniques

Explore cutting-edge AI tools for music production. Compare top solutions for sound design, mixing, and mastering with expert insights and pricing.

AI in Music Production: Beyond Songwriting – The Professional’s Guide to Sound Design, Mixing, and Mastering

While AI-generated melodies and lyrics have dominated headlines, the real revolution in music production is happening in the technical trenches. Today’s AI tools are transforming how professionals approach sound design, mixing, and mastering—offering capabilities that were unimaginable just a few years ago. From neural networks that can separate stems with surgical precision to AI assistants that master tracks to commercial standards, we’re witnessing a fundamental shift in music production workflows.

This deep dive explores how AI is being deployed in professional studios today, evaluates the leading tools in each category, and provides practical insights for producers looking to integrate these technologies into their workflow.

The New Frontier: AI-Powered Sound Design

How Professionals Are Using AI for Sound Design

Modern sound designers are leveraging AI to create entirely new sonic palettes. Rather than simply browsing preset libraries, they’re using neural synthesis to generate unique sounds that have never existed before. Film composers are using AI to analyze reference tracks and generate custom sound effects that perfectly match the emotional tone of a scene. Game developers are implementing procedural audio systems that create dynamic, context-aware soundscapes in real-time.

The key breakthrough has been spectral modeling—AI systems that can understand the fundamental characteristics of sound at a granular level. This allows for transformations that preserve the essential character of a sound while dramatically altering its timbre, texture, or temporal characteristics.
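Spectral modeling starts from a frequency-domain view of audio. The Python sketch below is not an AI model and does not show how any of the tools listed here work internally. It is a minimal, standard-library-only illustration of the magnitude spectrum such systems analyze, built on a synthetic two-tone test signal. The sample rate, frame length, and tone frequencies are arbitrary choices. A naive discrete Fourier transform is used for readability. Real tools use fast FFT implementations, with learned models layered on top.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz, kept small so the naive DFT stays fast
N = 256             # number of samples in the analysed frame


def magnitude_spectrum(samples: list[float]) -> list[float]:
    """Naive DFT: magnitude of each frequency bin from 0 Hz up to
    (but not including) the Nyquist frequency, normalised by length."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        total = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        mags.append(abs(total) / n)
    return mags


# Synthetic test signal: a 500 Hz tone plus a quieter 1500 Hz partial.
signal = [
    math.sin(2 * math.pi * 500 * t / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * 1500 * t / SAMPLE_RATE)
    for t in range(N)
]

spectrum = magnitude_spectrum(signal)
bin_hz = SAMPLE_RATE / N  # width of each frequency bin in Hz
top_two = sorted(range(len(spectrum)), key=spectrum.__getitem__, reverse=True)[:2]
for k in sorted(top_two):
    print(f"{k * bin_hz:.1f} Hz  magnitude {spectrum[k]:.3f}")
```

With 256 samples at 8 kHz, each bin is 31.25 Hz wide, so the two tones land exactly on bins 16 and 48. They appear as the two largest peaks, each with a magnitude of half its sine's amplitude.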

Leading AI Sound Design Tools

| Tool | Primary Function | Key Features | Price Range | Best For |
|---|---|---|---|---|
| Synplant 2 | AI-powered synthesizer | Genopatch technology, sound breeding, DNA manipulation | $149 | Experimental sound designers |
| IRCAM Lab The Snail | Frequency analysis & manipulation | Real-time tuning, spectral analysis, detuning effects | $99 | Precision tuning and spectral work |
| Output Arcade | AI-assisted sample manipulation | Intelligent loop matching, AI-powered effects chains | $10/month | Electronic producers, beat makers |
| Native Instruments Kontour | Phase vocoding synthesis | AI-guided resynthesis, spectral morphing | $199 | Film scoring, ambient production |
| Sonible smart:reverb | Intelligent reverb design | AI frequency shaping, adaptive reverb tails | $129 | Mix engineers, post-production |

Professional Implementation

At Abbey Road Studios, engineers have integrated AI-powered spectral repair tools into their restoration workflow, using machine learning to remove unwanted artifacts from vintage recordings while preserving the original character. Meanwhile, Hans Zimmer’s team has been experimenting with neural synthesis to create otherworldly textures for film scores, training custom models on orchestral recordings to generate hybrid organic-synthetic sounds. According to Music Radar’s coverage of AI in professional studios, major facilities worldwide are reporting efficiency gains of 30-50% in technical tasks while maintaining creative quality.

Mixing Revolution: AI as Your Assistant Engineer

The Current State of AI Mixing

AI mixing tools have evolved from simple preset matchers to sophisticated systems that understand musical context, genre conventions, and psychoacoustic principles. These tools don’t replace the mixing engineer—they augment their capabilities, handling routine tasks and providing intelligent starting points that can be refined with human creativity.

Professional mixing engineers are using AI for:

- Intelligent EQ curve matching and correction

- Automatic gain staging and balance optimization

- Dynamic range management across multiple tracks

- Spatial positioning and stereo field optimization

- Identifying and resolving frequency masking issues
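Automatic gain staging, listed above, comes down to measuring each track's level and computing the gain that brings it to a target. The Python sketch below makes several assumptions. Samples are floats with full scale at 1.0, and level is measured as plain RMS (commercial tools typically use more perceptual measurements). The -18 dBFS RMS target is an illustrative rule of thumb, not a standard. The helper names and track names are hypothetical.

```python
import math

# Illustrative target only: -18 dBFS RMS is a common rule of thumb, not a standard.
TARGET_RMS_DBFS = -18.0


def rms_dbfs(samples: list[float]) -> float:
    """RMS level in dBFS for float samples where full scale is 1.0.
    On this scale a full-scale sine wave reads about -3 dBFS."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def gain_stage(tracks: dict[str, list[float]], target_dbfs: float = TARGET_RMS_DBFS) -> dict[str, float]:
    """Gain in dB that moves each non-silent track's RMS level to the target.
    Silent tracks are skipped, since no finite gain can raise them."""
    gains = {}
    for name, samples in tracks.items():
        level = rms_dbfs(samples)
        if level != float("-inf"):
            gains[name] = target_dbfs - level
    return gains


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Scale samples by a gain given in decibels."""
    factor = 10 ** (gain_db / 20)
    return [x * factor for x in samples]


# Hypothetical tracks: one hot, one quiet, one silent.
tracks = {
    "vocals": [0.5, -0.5] * 100,   # RMS 0.5, roughly -6 dBFS
    "pad": [0.05, -0.05] * 100,    # RMS 0.05, roughly -26 dBFS
    "muted_fx": [0.0] * 200,
}
gains = gain_stage(tracks)
for name, gain in gains.items():
    staged = apply_gain(tracks[name], gain)
    print(f"{name}: {gain:+.1f} dB -> {rms_dbfs(staged):.1f} dBFS RMS")
```

The hot vocal is pulled down by about 12 dB and the quiet pad raised by about 8 dB, so both sit at the same RMS level. That level is only a baseline for the engineer to refine by ear.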

Top AI Mixing Solutions Compared

| Tool | Specialization | Learning Curve | Integration | Price | Unique Advantage |
|---|---|---|---|---|---|
| iZotope Neutron 4 | Complete mixing suite | Moderate | VST/AU/AAX | $399 | Mix Assistant with genre-specific profiles |
| Sonible smart:comp 2 | Intelligent compression | Low | VST/AU/AAX | $129 | Spectral compression with AI guidance |
| FabFilter Pro-Q 3 | AI-assisted EQ | Moderate | VST/AU/AAX | $179 | Intelligent solo feature, collision detection |
| Waves Clarity Vx Pro | Vocal processing | Low | VST/AU/AAX | $149 | Neural network noise removal |
| SSL Native X-EQ 2 | AI-enhanced analog modeling | Low | VST/AU/AAX | $199 | Anti-cramping technology with AI assistance |

Real-World Applications

Mix engineer Andrew Scheps has incorporated AI tools into his workflow for initial balance and EQ decisions, using them to quickly achieve a baseline mix that he then refines with analog gear. Similarly, Sylvia Massy uses AI-powered stem separation to create “impossible” remixes of classic tracks, extracting and reprocessing individual elements that were previously locked in stereo mixes.

Mastering: Where AI Truly Shines

AI Mastering Capabilities

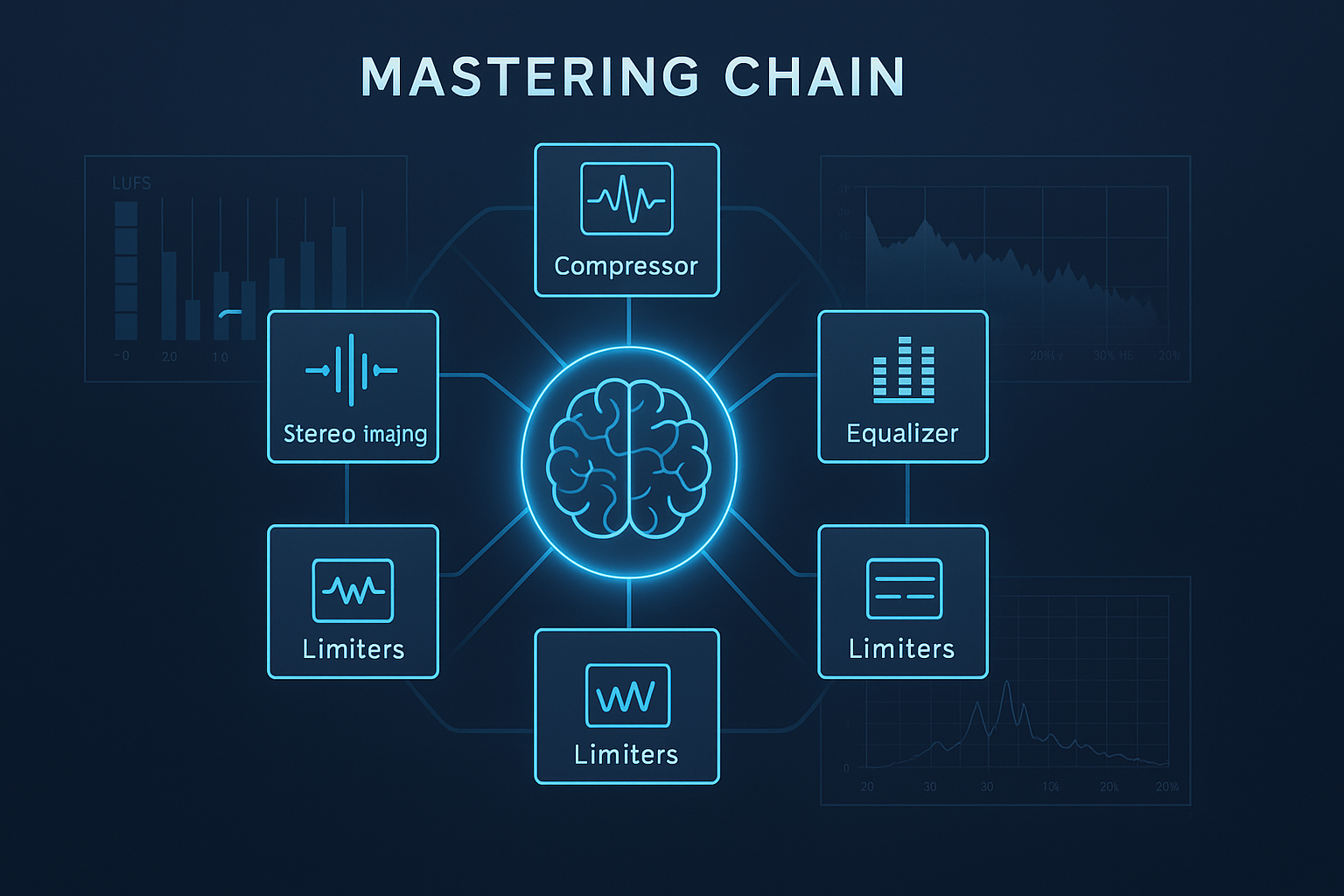

Mastering is perhaps where AI has made the most dramatic impact. Modern AI mastering engines can analyze thousands of reference tracks, understand loudness standards across different platforms, and apply complex chains of processing that adapt to the source material in real-time. These systems consider factors like:

- Genre-specific frequency curves and dynamics

- Platform-specific loudness targets (Spotify, Apple Music, CD, vinyl)

- Codec behavior and lossy compression artifacts

- Perceptual loudness versus measured LUFS (as detailed in the AES Technical Standards)

- Tonal balance across the frequency spectrum
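Once loudness has been measured, the arithmetic behind platform loudness targets is simple. The Python sketch below takes integrated loudness and true peak as inputs from an ITU-R BS.1770-style meter; it does not implement K-weighting or gating itself. The reference levels of -14 LUFS for Spotify and -16 LUFS for Apple Music are commonly cited figures, used here as assumptions to check against each platform's current documentation. The -1 dBTP ceiling and the `normalization_plan` helper are hypothetical, not any product's API.

```python
from dataclasses import dataclass

# Commonly cited normalization references in LUFS. Treat these as assumptions
# and check each platform's current documentation before relying on them.
TARGETS_LUFS = {"spotify": -14.0, "apple_music": -16.0}
CEILING_DBTP = -1.0  # hypothetical true-peak ceiling


@dataclass
class Measurement:
    integrated_lufs: float  # from a BS.1770-style loudness meter (not computed here)
    true_peak_dbtp: float   # from a true-peak meter (not computed here)


def normalization_plan(m: Measurement, target_lufs: float, ceiling_dbtp: float = CEILING_DBTP) -> dict:
    """Gain needed to reach the target loudness, and whether applying that
    gain would push the true peak above the ceiling."""
    gain_db = target_lufs - m.integrated_lufs
    peak_after = m.true_peak_dbtp + gain_db
    return {
        "gain_db": round(gain_db, 2),
        "peak_after_dbtp": round(peak_after, 2),
        "exceeds_ceiling": peak_after > ceiling_dbtp,
    }


masters = {
    "loud_master": Measurement(integrated_lufs=-9.0, true_peak_dbtp=-0.3),
    "quiet_master": Measurement(integrated_lufs=-18.0, true_peak_dbtp=-2.0),
}
for name, m in masters.items():
    for platform, target in TARGETS_LUFS.items():
        print(name, platform, normalization_plan(m, target))
```

Consider a loud master measured at -9 LUFS. Reaching a -14 LUFS reference would mean turning it down 5 dB, so if normalization is applied, the extra loudness is simply removed. A quiet -18 LUFS master with -2 dBTP peaks would need 4 dB of gain, which would put its peaks at +2 dBTP, above the ceiling.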

Professional AI Mastering Platforms

| Service/Tool | Processing Type | Turnaround | Customization | Price Model | Professional Features |
|---|---|---|---|---|---|
| LANDR | Cloud-based AI | Instant | High | $25/month unlimited | Reference track matching, stem mastering |
| iZotope Ozone 11 | Local AI-assisted | Real-time | Very High | $499 | Master Assistant, vintage module modeling |
| CloudBounce | Cloud-based AI | 90 seconds | Moderate | $9.90/track | Genre-specific algorithms, multiple formats |
| eMastered | Cloud-based AI | Instant | Moderate | $39/month | Grammy-winning engineer training data |
| Waves L3-LL Multimaximizer | Local AI-enhanced | Real-time | High | $299 | PLMixer technology, intelligent release control |
| Plugin Alliance ADPTR Master Suite | Local AI-assisted | Real-time | Very High | $199 | Perceptual loudness matching, streaming prep |

Case Studies from the Industry

Abbey Road Studios has begun offering AI-enhanced mastering services where their engineers work in tandem with machine learning systems to achieve optimal results faster than traditional methods. The AI handles the technical optimization while engineers focus on creative decisions and quality control.

Grammy-winning mastering engineer Emily Lazar has integrated AI tools into her workflow at The Lodge, using them for A/B comparisons and to quickly generate multiple master variations for client review. She reports that AI has reduced technical setup time by 40%, allowing more focus on creative refinement.

The Integration Challenge: Workflow Considerations

Building an AI-Enhanced Studio

Successfully integrating AI into professional workflows requires careful consideration of:

- Processing Power: Many AI tools require significant CPU/GPU resources

- Latency Management: Real-time AI processing can introduce latency

- Training and Adaptation: Time investment to understand AI behavior

- Quality Control: Maintaining critical listening despite automation

- Client Education: Explaining AI’s role in the creative process

Hybrid Approaches

The most successful implementations combine AI efficiency with human creativity. For example:

- Using AI for initial rough mixes, then refining with traditional tools

- Applying AI mastering as a reference point before final human adjustments

- Leveraging AI for technical tasks while maintaining creative control

- Implementing AI for quality control and consistency checking

Looking Ahead: The Next 18 Months

Emerging Technologies

Several breakthrough technologies are on the horizon:

- Neural Audio Codecs: AI compression that maintains quality at extremely low bitrates

- Real-time Style Transfer: Apply the mixing style of famous engineers to any track

- Contextual Processing: AI that understands musical structure and adjusts processing accordingly

- Collaborative AI: Systems that learn from your decisions and adapt to your style

- Spatial Audio AI: Intelligent Dolby Atmos and binaural mixing assistants

Industry Predictions

Based on conversations with leading developers and producers:

- 60% of commercial releases will use some form of AI processing by 2026

- AI will become standard in broadcast and streaming platform compliance

- Custom-trained AI models will become a differentiator for top studios

- Real-time AI processing will eliminate the need for rendering in many workflows

Practical Recommendations

For Beginners

Start with cloud-based services like LANDR or eMastered to understand AI’s capabilities without significant investment. Focus on using AI as a learning tool—analyze what changes the AI makes and why.

For Intermediate Producers

Invest in one comprehensive suite (like iZotope’s Music Production Suite) and thoroughly explore its AI features. Use AI for technical tasks while maintaining creative control over artistic decisions.

For Professionals

Integrate AI tools strategically for efficiency gains. Consider training custom models on your signature sound. Use AI for rapid prototyping and client previews while maintaining traditional workflows for final delivery.

The Bottom Line: Augmentation, Not Replacement

AI in music production has evolved far beyond novelty. Today’s tools offer genuine value to professionals, handling technical complexity while preserving creative freedom. The key isn’t choosing between AI and traditional methods—it’s understanding how to leverage both for optimal results.

As we move forward, the most successful producers won’t be those who resist AI or those who rely on it entirely, but those who thoughtfully integrate these tools into their creative process. The future of music production isn’t about replacement—it’s about augmentation, efficiency, and pushing creative boundaries further than ever before.

The revolution isn’t coming. It’s here. The question is: how will you use it to enhance your unique creative voice?

Have you integrated AI into your production workflow? Share your experiences in the comments below, and let us know which tools have made the biggest impact on your creative process.


History of AI – From the 1950s to Present

Artificial Intelligence (AI) might seem like a concept straight out of a modern sci-fi movie that entered our lives in the past couple of years, but did you know that the idea has been around for centuries?

In this article, we’ll dive into the history of AI, tracing its origins and major milestones. Continue reading to the end to discover how AI has evolved through history.

The Early Imaginings and Theoretical Foundations

Long before the term “artificial intelligence” was coined, humans dreamed of creating intelligent machines. Ancient myths and stories from various cultures feature mechanical beings endowed with intelligence, showcasing early human fascination with mimicking life through machinery. For instance, in Greek mythology, Hephaestus, the god of craftsmanship, created mechanical servants.

Fast forward to the 17th and 18th centuries, when philosophers and mathematicians like René Descartes and Gottfried Wilhelm Leibniz began examining whether thinking could be explained, or even reproduced, mechanically. Descartes described the body as a kind of machine while arguing that reason set humans apart, and Leibniz envisioned reducing logical reasoning to calculation. These ideas helped lay the groundwork for computational thinking.

The Formal Birth of AI (1950s – 1960s)

The term “artificial intelligence” was coined by John McCarthy, then a young assistant professor at Dartmouth College, in a 1955 proposal for a summer workshop held in 1956. That pivotal gathering is now considered the birth of AI as a field of study.

This event, known as the Dartmouth Summer Research Project on Artificial Intelligence, brought together researchers interested in neural networks, the study of intelligence, and the possibility of replicating human thought in machines.

During this era, AI research received significant attention and funding. Early successes included programs that could solve algebra problems and play checkers at a high level. These developments led to optimistic predictions about AI’s future, setting high expectations for rapid progress.

The First AI Winter (1970s)

Despite early enthusiasm, progress in AI research did not keep pace with expectations. By the mid-1970s, the limitations of existing AI technology became apparent, leading to the first AI Winter, a period marked by reduced funding and waning interest in AI research. This downturn was largely due to the overly ambitious expectations that could not be met by the technology of the time, which struggled with real-world applications.

The Resurgence and Rise of Machine Learning (1980s – 1990s)

AI experienced a resurgence in the 1980s. Commercial expert systems, which encoded specialists’ knowledge as hand-written rules, attracted new investment, while researchers increasingly turned to machine learning. Instead of trying to directly encode AI with extensive knowledge and rules about the world, they focused on creating algorithms that could learn from data.

This shift was significant, leading to more robust and adaptable AI systems. Backpropagation, popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams, gave multi-layer neural networks a practical way to learn from their errors, adjusting their internal weights to improve performance over time.
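To make “learning from errors” concrete, the Python sketch below trains a tiny two-layer network on the logical AND function with plain gradient descent. The network has two sigmoid hidden units and one sigmoid output. After each forward pass, the output error is sent backwards through the network with the chain rule, which is the core idea of backpropagation. This is a minimal illustration, not a reconstruction of the 1986 experiments. The network size, learning rate, task, and parameter names are arbitrary, hypothetical choices.

```python
import math
import random

NAMES = ["w11", "w12", "b1", "w21", "w22", "b2", "v1", "v2", "c"]
DATA = [((0, 0), 0.0), ((0, 1), 0.0), ((1, 0), 0.0), ((1, 1), 1.0)]  # logical AND


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(p: dict[str, float], x: tuple[int, int], target: float):
    """Forward pass, then pass the output error backwards with the chain rule."""
    h1 = sigmoid(p["w11"] * x[0] + p["w12"] * x[1] + p["b1"])
    h2 = sigmoid(p["w21"] * x[0] + p["w22"] * x[1] + p["b2"])
    y = sigmoid(p["v1"] * h1 + p["v2"] * h2 + p["c"])
    loss = 0.5 * (y - target) ** 2
    d_out = (y - target) * y * (1 - y)      # error at the output unit's input
    d_h1 = d_out * p["v1"] * h1 * (1 - h1)  # share of that error sent to hidden unit 1
    d_h2 = d_out * p["v2"] * h2 * (1 - h2)  # share of that error sent to hidden unit 2
    grads = {
        "v1": d_out * h1, "v2": d_out * h2, "c": d_out,
        "w11": d_h1 * x[0], "w12": d_h1 * x[1], "b1": d_h1,
        "w21": d_h2 * x[0], "w22": d_h2 * x[1], "b2": d_h2,
    }
    return loss, grads


def batch_loss_and_grads(p: dict[str, float]):
    """Sum the loss and gradients over the whole (tiny) dataset."""
    total, summed = 0.0, dict.fromkeys(NAMES, 0.0)
    for x, t in DATA:
        loss, grads = loss_and_grads(p, x, t)
        total += loss
        for k in NAMES:
            summed[k] += grads[k]
    return total, summed


random.seed(0)
params = {k: random.uniform(-1, 1) for k in NAMES}
LEARNING_RATE = 0.5
start_loss, _ = batch_loss_and_grads(params)
for step in range(5000):
    _, grads = batch_loss_and_grads(params)
    for k in NAMES:
        params[k] -= LEARNING_RATE * grads[k]  # nudge each weight against its gradient
end_loss, _ = batch_loss_and_grads(params)
print(f"loss before training: {start_loss:.4f}, after: {end_loss:.4f}")
```

The backward pass can be checked against finite differences. Nudging any single weight slightly up and down changes the loss at the rate the computed gradient predicts.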

During this period, governments and industries began investing heavily in AI again, intrigued by its potential applications. AI started to be used for logistics, data management, and within expert systems in fields like medicine and engineering, marking its transition from a purely academic pursuit to a practical tool in business and other areas.

By the late 1990s, the internet boom provided AI researchers with unprecedented amounts of data and a new platform to deploy AI technologies. This period led to significant advancements in algorithms and the capability of AI systems to handle tasks involving big data, marking another turning point in the AI development timeline.

As we continue exploring the evolution of AI, we will see how the 21st century brought AI into our daily lives, making it an indispensable tool in various sectors, from healthcare to entertainment. Stay tuned as we uncover more about how AI continues to evolve and shape our world in ways we could hardly imagine just a few decades ago.

AI in the 21st Century: Expansion into Daily Life and Beyond

As the new millennium unfolded, AI’s integration into daily life and various sectors accelerated at an unprecedented pace. The development of sophisticated machine learning models, particularly Deep Learning, has enabled AI to analyze and generate large volumes of data with astonishing accuracy.

This section of our journey through the history of artificial intelligence will explore how AI has become a ubiquitous part of modern life.

Deep Learning and Big Data

The late 2000s and early 2010s witnessed a major breakthrough with the advent of deep learning techniques, which use neural networks with many layers to learn increasingly abstract features of data. These networks were fueled by the explosive growth of “big data” generated by the digital activities of businesses and consumers alike.

Companies like Google, Amazon, and Facebook began using deep learning to improve products and services, from enhancing search algorithms to personalizing advertisements, thereby making AI an integral part of the tech industry’s infrastructure.

AI in Consumer Technology

Perhaps the most relatable example of AI for most people is its role in consumer technology. Virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant use AI to understand and respond to voice commands, providing users with information, entertainment, and assistance with daily tasks.

The seamless integration of AI into smartphones and home devices has dramatically changed how people interact with technology, making AI a helpful companion in our everyday lives.

Autonomous Vehicles

Another significant area of AI development is autonomous vehicles. Companies like Tesla, Waymo, and Uber have invested heavily in AI systems designed to navigate roads with little or no human intervention. These vehicles use AI to process inputs from various sensors and cameras, making split-second decisions that adapt to complex traffic environments and driving conditions.

AI in Healthcare

AI’s impact on healthcare has been profound, offering tools for diagnosis, personalized medicine, and patient management. In some narrowly defined tasks, AI algorithms can analyze medical images with accuracy comparable to that of human radiologists.

Additionally, AI is used to predict patient outcomes, personalize treatment plans, and manage healthcare records more efficiently, significantly improving the quality of care and operational efficiencies in healthcare facilities.

How AI Continues to Shape Our Future

The journey of AI from a concept in myths to a key player in major industries shows its vast potential and inevitable growth. As AI technology continues to evolve, its capabilities will likely become more sophisticated, leading to even more innovative applications across different sectors.

Ethical Considerations and Future Challenges

However, the rapid growth of AI also brings challenges, particularly ethical considerations like privacy, security, and the impact of automation on employment. The future of AI will likely focus not only on technological advancements but also on addressing these ethical issues, ensuring that AI benefits society as a whole.

The Road Ahead

Looking forward, the integration of AI in more complex tasks and its potential to understand human emotions and make morally significant decisions are areas of intense research and interest. The journey of AI is far from over; it is evolving every day, promising a future where AI and humans coexist, complementing each other’s capabilities.

Conclusion

The history of artificial intelligence is a fascinating tale of human ingenuity and technological advancement. From ancient myths of mechanical servants to sophisticated AI that permeates many aspects of our lives, AI’s journey is a testament to the relentless pursuit of knowledge and understanding by scientists, engineers, and thinkers across generations.

As we stand on the shoulders of these pioneers, we look forward to a future where AI continues to enhance our abilities and enrich our lives.


Why Can’t AI Art Make Hands

Artificial Intelligence (AI) has made significant strides in many fields, and art creation is no exception. AI art generators, like those powered by machine learning models such as DALL-E or GANs (Generative Adversarial Networks), can create stunning images that dazzle the imagination.

These tools are used for everything from generating abstract art for digital spaces to crafting backgrounds for games and virtual realities. Despite their capabilities, these AI systems often struggle with a peculiar challenge: drawing human hands accurately.

This article explains why AI art generators frequently produce hands that look awkward, distorted, or downright eerie, what makes hands such a tough challenge for AI, and why this matters more than you might think for the future of AI-generated art.

Why AI Struggles With Generating Human Hands

Human hands are one of the most complex and detailed parts of the body, capable of a wide range of motions and configurations that express a multitude of gestures and actions. This complexity makes hands difficult for AI image generators, for several reasons.

Below, you’ll find why AI art generators struggle with drawing hands.

High Variability

Hands are highly variable in their appearance and position. They can interact with numerous objects, appear in countless poses, and each hand gesture can convey different emotions or actions.

For AI, which learns from a dataset of existing images, the immense variability of hand positions and their interactions with other objects can lead to a lack of comprehensive learning material. As a result, the AI often struggles to accurately recreate hand positions that it hasn’t encountered frequently in its training set.

Intricate Detailing

The structure of a hand is intricate, with fine detailing in the knuckles, nails, and skin texture. Each of these details needs to be rendered accurately for a hand to look realistic.

AI systems typically generate images based on patterns they have learned from data; if the details in the training images are not diverse or detailed enough, the AI will have difficulty replicating them accurately. This often results in hands that look flat, malformed, or overly simplified.

Complex Interactions

Hands are rarely seen in isolation; they are usually interacting with objects or other parts of the body. This interaction adds a layer of complexity to the image generation process.

AI must not only generate the hand but also replicate how it interacts with its environment. Doing this convincingly requires a grasp of 3D space, occlusion, and how fingers wrap around or press against objects, which image generators can only approximate from the 2D pixel patterns they were trained on.

Data Limitations

The quality of the data used to train AI significantly impacts its output. If the dataset is not diverse enough or lacks high-quality images of hands in various poses and interactions, the AI will struggle to generate high-quality images of hands.

Moreover, biased or insufficient training data can lead to repetitive errors, such as consistently generating an incorrect number of fingers or unrealistic hand shapes.

Other Parts of the Human Body AI Struggles to Generate

While AI’s difficulties with generating realistic human hands are well-documented, this challenge extends to other complex parts of the human body as well. Features such as faces, feet, and hair also present significant hurdles for AI image generators.

The reasons for these struggles often overlap with those behind poor hand generation. Let’s explore why AI particularly struggles with these features.

Faces

The human face is a centerpiece of identity and expression, involving subtle micro-expressions that convey a wide range of emotions, from joy to sorrow. AI often struggles to replicate these nuances for several reasons:

- Complexity of Expressions: Human expressions involve small, often rapid changes in facial muscles. AI systems find it challenging to capture these nuances accurately because they require an understanding of how muscles interact and how expressions change dynamically over time.

- Symmetry and Proportions: Human faces have a specific symmetry and proportion that can be difficult for AI to replicate accurately. Even slight deviations in symmetry or proportions can make a face look unnatural or unsettling.

- Eye Detailing: The eyes are particularly expressive and detailed parts of the face. AI systems often struggle to render the depth and sparkle of human eyes, which are critical for a face to appear lifelike and relatable.

Feet

Like hands, feet are complex structures with many small bones and joints and a wide range of movements. AI struggles with feet for similar reasons:

- Variability in Position: Feet can appear in numerous positions depending on the body’s actions, such as standing, running, or resting. Capturing these positions accurately, along with the associated shadows and textures, is challenging for AI.

- Interaction with Surfaces: Feet often interact with various surfaces, which affects their appearance. AI must replicate these interactions, such as the flattening of the soles when standing or the bending of the toes when walking, which is a complex task.

Hair

Hair presents another significant challenge for AI due to its fluid and dynamic nature:

- Texture and Flow: Hair has different textures and styles that can change with movement and environmental conditions, such as wind or humidity. AI systems often struggle to generate hair that looks natural and flows realistically.

- Volume and Light Interaction: Accurately rendering how a mass of hair interacts with light and shadow is complex. Hair also has varying degrees of transparency and reflectivity, which are difficult for AI to replicate, often resulting in hair that looks either too heavy or too light.

These features require a deep understanding of human anatomy, the physics of light and materials, and the subtleties of human expression, and these are all areas where AI still has room for improvement.

As AI technology evolves, its accuracy with these complex human features is likely to keep improving, driven by advances in machine learning models, increased computational power, and more extensive training datasets.

These improvements will help AI overcome its current limitations, allowing for more realistic and nuanced representations of human features in digital art and other applications.

How to Help AI Get Human Features Right

If you use AI image tools and are tired of them getting parts of the human body wrong, there are a few things you can do to fix this, or at least make it easier for the AI to generate better-looking images.

Here are several practical steps that can help improve the accuracy of AI-generated human features:

Use High-Quality, Detailed Images

The quality of images used in training datasets significantly impacts AI’s output. High-resolution images that show detailed features of hands, facial expressions, and interactions can provide the AI with a better understanding of subtle details. This is particularly crucial for intricate parts like the texturing of skin, the way light plays on muscle, or the specifics of hand positioning.

Implement Advanced Modeling Techniques

Employing advanced neural network models that focus on depth and texture can help generate more realistic human features. Techniques such as Generative Adversarial Networks (GANs) have been particularly successful in creating photorealistic images. These models learn to simulate fine details more accurately by pitting two neural networks against each other: one generates images, and the other judges how realistic they look.
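To make the "two networks against each other" idea concrete, here is a deliberately tiny Python sketch of one adversarial training step. It is an illustration under stated assumptions, not how any real image generator is built: the generator is a hypothetical one-dimensional linear map `G(z) = a*z + b`, the discriminator is a hypothetical logistic scorer `D(x) = sigmoid(w*x + c)`, and the function names, parameter names, and learning rate are all made up for this example. Real image GANs replace both players with deep neural networks trained on batches of images, but the alternating loop has the same shape.

```python
import math


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


# Losses whose gradients train_step applies by hand below.
def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: the discriminator wants d_real -> 1 and d_fake -> 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Common 'non-saturating' generator loss: the generator wants d_fake -> 1."""
    return -math.log(d_fake)


def train_step(params: dict, x_real: float, z: float, lr: float = 0.1) -> dict:
    """One alternating update: discriminator first, then generator.

    Hypothetical toy models, for illustration only:
      generator      G(z) = a*z + b
      discriminator  D(x) = sigmoid(w*x + c)
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]

    # 1) Discriminator step: push D(real) up and D(fake) down.
    x_fake = a * z + b
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    # d(loss)/d(logit) is (d_real - 1) for the real sample and d_fake for the fake one.
    grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
    grad_c = (d_real - 1.0) + d_fake
    w -= lr * grad_w
    c -= lr * grad_c

    # 2) Generator step: move G(z) toward where the updated D says "real".
    x_fake = a * z + b
    d_fake = sigmoid(w * x_fake + c)
    # Chain rule: d(loss)/d(x_fake) = (d_fake - 1) * w; d(x_fake)/da = z, d(x_fake)/db = 1.
    grad_x = (d_fake - 1.0) * w
    a -= lr * grad_x * z
    b -= lr * grad_x

    return {"a": a, "b": b, "w": w, "c": c}
```

Starting from `w = c = 0`, a single call such as `train_step({"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}, x_real=4.0, z=0.0)` first raises `w`, so the discriminator now scores the real value 4.0 above the fake value 0.0, and then nudges `b` upward, moving the generator's output toward the real data. Repeating this many times is the adversarial game described above; with models this simple the two players can also oscillate instead of settling, which is one reason GAN training is known to be delicate.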

Community Feedback

The AI development community can be a tremendous resource. Platforms like Reddit often feature discussions where users share their experiences with different AI tools, providing insights into common issues and potential solutions. By engaging with these communities, you can find fixes for common problems with AI-generated images.

AI Is Getting Better at Generating Images Every Day

Despite the current challenges, AI technology is improving rapidly, and the quality of images it can generate is getting better every day. Developers are continually working on refining AI algorithms, expanding training datasets, and incorporating user feedback into the development process. These efforts are gradually overcoming the difficulties AI faces with complex human features like hands, faces, and hair.

Several AI tools are already making significant strides in this area. For instance, newer versions of AI image generators have begun to show improved capability in handling human anatomy with greater accuracy. These advancements suggest a promising future where AI can not only match but potentially exceed human capabilities in creating detailed, realistic images.

As AI continues to evolve, it holds the potential to transform artistic creation, offering tools that augment human creativity with digital precision. For artists, designers, and creators, these developments signal exciting new possibilities for collaboration between human imagination and AI efficiency, opening up a world of creative opportunities that were once thought impossible.
